GPU Deployment

22nd CCP PET-MR software framework meeting

Casper O. da Costa-Luis

Biomed. Eng. & Im. Sci.

KCL, St Thomas' Hospital, London SE1 7EH

20 Sept 2018

Overview

- hardware and software

- desktop, cluster, or cloud

- docker

- nvidia-docker

Hardware and Software

- CPU

- AMD GPU

- OpenCL 2

- NVIDIA GPU

- --> CUDA (C++ abstraction) <--

cuFFT, cuBLAS, cuDNN

- OpenCL 1.2 (v2.0 beta)

CUDA

- Most widespread

- Better scientifically supported

- ~30% faster than OpenCL

- But proprietary and tied to NVIDIA

CUDA

Desktop

- Hardware (NVIDIA GPU)

- OS (Win, UNIX, Android)

- Graphics Driver

- CUDA toolkit (C++ libraries and `nvcc` compiler)

Cluster

- Can target multiple GPUs

cudaSetDevice(1); // sets current GPU to #2- Unified virtual addressing (UVA)

cudaMemcpyPeerAsync()- Shared host memory (via CPU/RAM)

- P2P memcopies

(shortest device-to-device PCIe path)

cudaDeviceEnablePeerAccess();

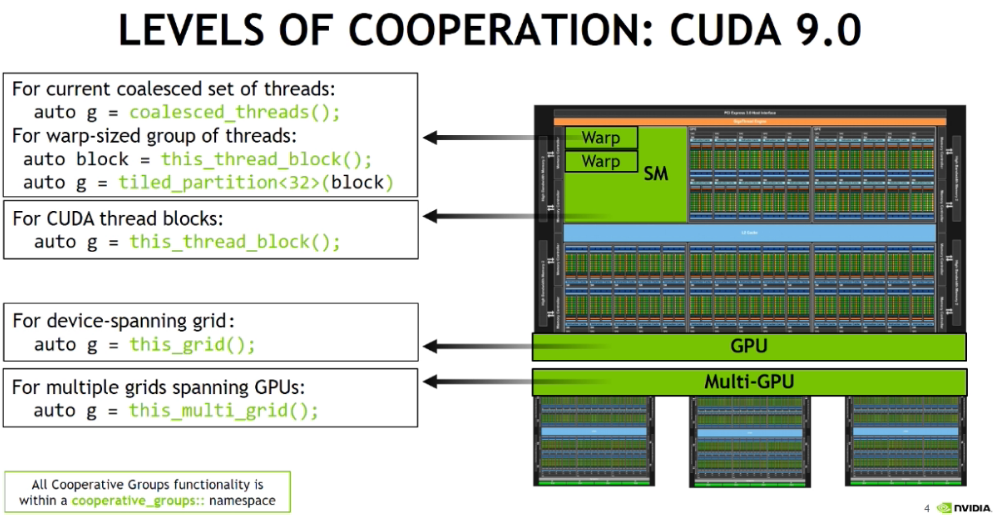

cudaDeviceCanAccessPeer();CUDA 9

http://on-demand.gputechconf.com/gtc/2017/video/s7622-perelygin-robust-scalable-cuda-parallel-programming-model.mp4

NVIDIA Collective Communications Library (NCCL)

- Multi-gpu, multi-node communication collectives

all-gather, all-reduce, broadcast, reduce, reduce-scatter

- Automatic topology detection to determine optimal communication path

- PCIe, NVLink high-speed interconnect

- Support multi-threaded and multiprocess applications

- Support for InfiniBand verbs, RoCE and IP Socket internode communication

Wrappers

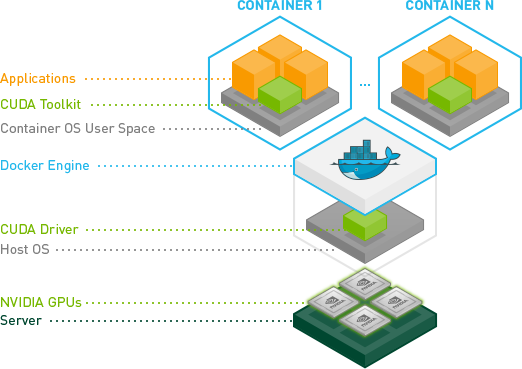

Docker

What is Docker

- Low-overhead, container-based replacement for virtual machines (VMs)

https://github.com/CCPPETMR/SIRF/wiki/SIRF-SuperBuild-on-Docker

docker-compose up --no-start sirf

docker start -ai sirf

Linux

Possibility to get CUDA support within the container

- share /dev/nvidia*!
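As a rough sketch of the device-sharing idea above, the helper below collects the NVIDIA device nodes into `--device` flags for `docker run`. The function name `nvidia_device_flags` is hypothetical. It also assumes the container image already holds user-space driver libraries matching the host driver; sharing the device nodes alone may not be enough for CUDA to work.

```shell
# Hypothetical helper: print one `--device=<node>` flag per NVIDIA device
# node found in a directory (default: /dev).
nvidia_device_flags() {
    local dir="${1:-/dev}" flags="" node
    for node in "$dir"/nvidia*; do
        # An unmatched glob stays a literal pattern, so skip paths that do not exist.
        [ -e "$node" ] || continue
        flags="$flags --device=$node"
    done
    # Drop the leading space before printing.
    echo "${flags# }"
}
```

A call such as `docker run $(nvidia_device_flags) ...` would then pass every matching node through to the container.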

Cloud

NVIDIA GPU Cloud (NGC)

- Pre-built repository of containers with CUDA toolkit & commonly-used apps

- Can pull images onto own machine or cloud providers

Cloud Providers

- One-click deploy container(s)/cluster(s)

- customisable GPU/CPU/RAM/SSD

- Google Cloud https://cloud.google.com/gpu/

- Amazon AWS EC2 P* https://aws.amazon.com/ec2/instance-types/p3/

Example Deployment

Methods

Explicit instructions for:

- Local Ubuntu Native

- dependency hell

- Docker Ubuntu Host

- less painful (probably maybe)

Local Ubuntu Native

- Install CUDA drivers

- Install CUDA toolkit https://developer.nvidia.com/cuda-90-download-archive

- Install extra libraries (e.g. cuDNN, NCCL, ...) https://developer.nvidia.com/

- Realise some applications require a different toolkit version

- Install the other version

- Stress about pointing applications to the wrong version
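One way to reduce the version juggling described above is to select a toolkit explicitly per shell session. The sketch below assumes each toolkit lives in `/usr/local/cuda-<version>`, the usual location for NVIDIA's packages. The function name `use_cuda` and its optional prefix argument are hypothetical, and `CUDA_HOME` is only a convention that some build systems read.

```shell
# Hypothetical helper: point the current shell at one installed CUDA toolkit.
# Usage: use_cuda VERSION [PREFIX]   (PREFIX defaults to /usr/local)
use_cuda() {
    local version="$1" prefix="${2:-/usr/local}"
    local root="$prefix/cuda-$version"
    if [ ! -d "$root" ]; then
        echo "use_cuda: no toolkit found at $root" >&2
        return 1
    fi
    export CUDA_HOME="$root"
    # Put this toolkit's compiler and libraries ahead of any other version.
    export PATH="$root/bin:$PATH"
    export LD_LIBRARY_PATH="$root/lib64${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
}
```

Calling `use_cuda 9.0` before building or launching an application should make `nvcc` and the runtime libraries resolve to that version, for the current shell only.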

- Install CUDA drivers

$ sudo add-apt-repository ppa:graphics-drivers/ppa

$ sudo ubuntu-drivers autoinstall

- Install CUDA toolkit https://developer.nvidia.com/cuda-90-download-archive

# Assuming 9.0 (9.2+ not yet supported by e.g. `tensorflow`)

$ wget http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/cuda-repo-ubuntu1604_9.0.176-1_amd64.deb

$ sudo dpkg -i cuda-repo-ubuntu1604_9.0.176-1_amd64.deb

$ sudo apt-key adv --fetch-keys \

http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/7fa2af80.pub

$ sudo apt-get update

$ sudo apt-get install cuda-9-0

Docker Ubuntu Host

- Install CUDA drivers

- Install docker https://docs.docker.com/install/linux/docker-ce/ubuntu/

- Install nvidia-docker https://github.com/NVIDIA/nvidia-docker/

- Pull and Run https://ngc.nvidia.com/registry/

- Install docker https://docs.docker.com/install/linux/docker-ce/ubuntu/

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

$ sudo add-apt-repository \

"deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

$ sudo apt-get update

$ sudo apt-get install docker-ce

- Install nvidia-docker https://github.com/NVIDIA/nvidia-docker/

# Add the package repositories

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | \

sudo apt-key add -

dist=$(. /etc/os-release;echo $ID$VERSION_ID)

curl -s -L \

https://nvidia.github.io/nvidia-docker/$dist/nvidia-docker.list | \

sudo tee /etc/apt/sources.list.d/nvidia-docker.list

sudo apt-get update

# Install nvidia-docker2 and reload the Docker daemon configuration

sudo apt-get install -y nvidia-docker2

sudo pkill -SIGHUP dockerd

- Pull and Run https://ngc.nvidia.com/registry/

# Download image complete with CUDA toolkit and cuDNN from NGC

$ docker pull nvcr.io/nvidia/cuda:9.0-cudnn7.2-devel-ubuntu16.04

# Alias

$ docker tag nvcr.io/nvidia/cuda:9.0-cudnn7.2-devel-ubuntu16.04 \

nvidia/cu90dnn72

# Test `nvidia-smi`

$ docker run --runtime=nvidia --rm nvidia/cu90dnn72 nvidia-smi
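Building on the `nvidia-smi` test above, a small wrapper can run arbitrary commands from the current directory inside the GPU container. This is a sketch only: `gpu_run`, the `DRY_RUN` variable and the `/work` mount point are hypothetical names. It assumes `nvidia-docker2` is installed so that `--runtime=nvidia` is available.

```shell
# Hypothetical wrapper: run a command inside a GPU-enabled container with the
# current directory mounted at /work. With DRY_RUN=1 the docker command is
# printed instead of executed.
gpu_run() {
    if [ "$#" -lt 1 ]; then
        echo "usage: gpu_run IMAGE [COMMAND...]" >&2
        return 2
    fi
    local image="$1"
    shift
    # Rebuild the argument list as the full docker invocation.
    set -- docker run --runtime=nvidia --rm -v "$PWD":/work -w /work "$image" "$@"
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}
```

For example, `gpu_run nvidia/cu90dnn72 nvidia-smi` would repeat the test above with the working directory also visible inside the container.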